By Ana E. Diaz, PE

This essay addresses Wayne Channon’s concerns about variability in dressage judging ("Who Moved My Cheese?" Eurodressage, 04/10/2017). My central point is that the variability seen in dressage scoring can be readily quantified and addressed using well-understood, well-accepted mathematical tools that are available today.

Mr. Channon describes inconsistencies seen in dressage judging and then makes some (rather complicated) suggestions for improvement. Based on the article, it also seems that the IDOC (International Dressage Officials Club) is still not convinced change is needed. So perhaps this is an indication that some ground still has to be explored so that all stakeholders, competitors and officials can see a common path toward mutually beneficial change.

I propose that it doesn’t have to be complicated, and that keeping things simple helps to build a base of common understanding. When trying to eliminate systemic flaws in any process, one must first define the problem well enough that all parties can agree a problem actually exists. According to Efrat Goldratt-Ashlag’s “Layers of Resistance” (Ch. 20, Theory of Constraints Handbook), organizations must go through several stages before they arrive at mutual consensus:

- They disagree on the problem:

----- There is no problem

----- Not ‘my’ problem

----- The problem is out of my control

- They disagree on the solution:

----- Disagreement on the direction for the solution

----- Disagreement on the details of the solution

----- Yes, but… (the solution has negative ramifications for me)

- They disagree on implementation of the solution:

----- Yes, but… (we can’t implement the solution)

----- The solution holds risk (for me)

----- “I don’t think so” (fear)

- True Buy-in

While these concepts may seem basic, they are frequently ignored, and thus organizations embark on “improvement programs” that don’t produce the promised results.

So, how would one go about reducing variability? First, we establish a baseline to quantify variability BEFORE embarking on any improvement program. A baseline is used to determine whether an “improvement program” actually delivers any real change. Only by creating an inventory of the dimensions that give rise to variability can we document the extent of the problem before trying to eliminate it, and then compare Before and After.

So, how do we establish this baseline? Without dwelling on mathematical lingo: statistical tools such as Measurement System Analysis (MSA), Inter-rater Reliability, Gage Repeatability and Reproducibility (Gage R&R), and ANOVA (Analysis of Variance) are robust, well-accepted methods. These tools are widely used to describe process robustness in many fields.

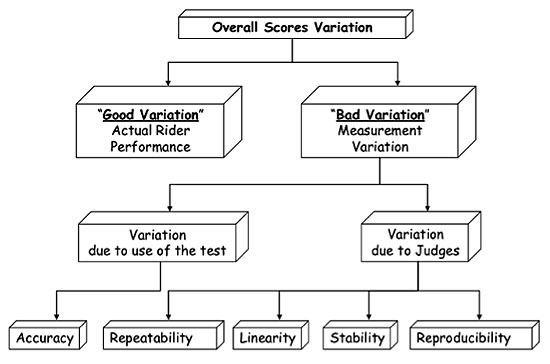

Conceptual representation for sources of variability in dressage judging

Not all variability is bad, so we should not attempt to eradicate all of it. “Good Variability” is the variation in scores that separates good riders from better riders from the best riders, and determines rider placing.

“Bad Variability” is measurement system error. It is “noise” and creates the variation that “muddies up” the measurement.
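As a rough illustration of how the two kinds of variability could be separated, the sketch below applies a simple two-way ANOVA (riders crossed with judges, one mark per rider per judge) and estimates what share of the total variance comes from riders, from judges, and from everything left over. The function name, the data layout, and the sample marks are all assumptions made up for this example; a full Gage R&R study would also need repeated scorings of the same ride to separate repeatability from the residual.

```python
from statistics import mean

def variance_shares(scores):
    """Estimate variance shares from a riders x judges table of marks.

    scores[r][j] is the mark judge j gave rider r (hypothetical layout).
    Returns the fraction of total variance attributed to riders
    ("good" variability), judges, and the residual ("bad" variability).
    """
    n_riders = len(scores)
    n_judges = len(scores[0])
    grand = mean(x for row in scores for x in row)
    rider_means = [mean(row) for row in scores]
    judge_means = [mean(row[j] for row in scores) for j in range(n_judges)]

    # Sums of squares for a two-way layout without replication.
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_rider = n_judges * sum((m - grand) ** 2 for m in rider_means)
    ss_judge = n_riders * sum((m - grand) ** 2 for m in judge_means)
    ss_resid = ss_total - ss_rider - ss_judge

    # Mean squares.
    ms_rider = ss_rider / (n_riders - 1)
    ms_judge = ss_judge / (n_judges - 1)
    ms_resid = ss_resid / ((n_riders - 1) * (n_judges - 1))

    # Variance components (negative estimates are clipped to zero).
    var_rider = max(0.0, (ms_rider - ms_resid) / n_judges)
    var_judge = max(0.0, (ms_judge - ms_resid) / n_riders)
    var_resid = max(0.0, ms_resid)
    total = var_rider + var_judge + var_resid
    if total == 0:
        return {"rider": 0.0, "judge": 0.0, "residual": 0.0}
    return {
        "rider": var_rider / total,
        "judge": var_judge / total,
        "residual": var_resid / total,
    }

# Made-up marks: 4 riders (rows) scored by 3 judges (columns).
example_scores = [
    [7.0, 7.5, 6.5],
    [6.0, 6.5, 6.0],
    [8.0, 8.5, 7.5],
    [6.5, 7.0, 6.0],
]
print(variance_shares(example_scores))
```

A large rider share means the scores mostly reflect differences between rides. A large judge or residual share points to noise in the measurement system itself.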

Mr. Channon brings up the terms of “Accuracy” and “Precision” in this context, but what do these concepts really mean as applied to dressage judging?

Accuracy is a measure of how closely a measurement approaches the “true value,” the bulls-eye in our target. As applied to dressage, the “true value” is a standard for performance as defined by the rulebook. It is this standard to which the rider is held, and against which a judge evaluates the rider.

Precision describes the scatter in the measurements. It measures how closely individual measurements agree with each other. In the archery analogy, precision is how tightly our arrows are grouped, wherever on the target they land.

If the “standard” by which to evaluate a dressage movement is not clearly defined (and used), then error is introduced because we have a “moving target.” This happens when judges hold differing opinions or have varying interpretations of the standard.
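To make the distinction concrete, here is a minimal sketch that computes both quantities for one movement. It assumes a reference mark exists for that movement (for example, one agreed by an expert panel reviewing video); that reference, the function name, and the sample marks are hypothetical and not taken from any rulebook. Accuracy is expressed as bias (average mark minus reference) and precision as the standard deviation of the marks.

```python
from statistics import mean, stdev

def accuracy_and_precision(marks, reference):
    """Return (bias, spread) for a set of marks on one movement.

    bias   : mean mark minus the assumed reference mark (accuracy)
    spread : sample standard deviation of the marks (precision)
    """
    bias = mean(marks) - reference
    spread = stdev(marks)
    return bias, spread

# Made-up marks from five judges for the same movement.
reference_mark = 7.0                         # hypothetical "true value"
tight_but_low = [6.0, 6.0, 6.5, 6.0, 6.5]    # precise, not accurate
scattered = [6.0, 8.0, 7.0, 6.5, 7.5]        # accurate on average, not precise

for label, marks in [("tight_but_low", tight_but_low), ("scattered", scattered)]:
    bias, spread = accuracy_and_precision(marks, reference_mark)
    print(f"{label}: bias={bias:+.2f}, spread={spread:.2f}")
```

The first panel groups its arrows tightly but away from the bulls-eye. The second centers on the bulls-eye but with a broad spread.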

There has been lots of debate about “judging to the rulebook” in dressage. If scoring variability is truly arising from judges deviating from the rulebook, this hypothesis can be put to statistical tests. No need to reinvent the wheel. The tools are:

- The Kappa Statistic: Measures whether multiple judges gave the same mark for a movement.

- Kendall’s Correlation Coefficient: Measures how well a judge’s marks adhere to the standard.

- Kendall’s Coefficient of Concordance: Measures whether the judges placed the riders in the same order.
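For readers curious how such agreement statistics are calculated, the sketch below implements two of them in plain Python. `cohens_kappa` is the two-judge form of the kappa statistic: it compares how often two judges gave the identical mark with how often they would agree by chance. `kendalls_w` is Kendall’s coefficient of concordance: it ranks the riders within each judge’s scores and reports how similar those rankings are, from 0 (no agreement) to 1 (identical order). The function names and sample data are assumptions for illustration, and the W formula used here omits the correction for tied ranks, so it is only a sketch.

```python
from collections import Counter

def cohens_kappa(marks_a, marks_b):
    """Chance-corrected agreement between two judges' marks."""
    n = len(marks_a)
    observed = sum(a == b for a, b in zip(marks_a, marks_b)) / n
    count_a, count_b = Counter(marks_a), Counter(marks_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / n ** 2
    if expected == 1:  # both judges used one single identical mark
        return 1.0
    return (observed - expected) / (1 - expected)

def average_ranks(values):
    """Rank values from 1 (lowest), giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def kendalls_w(scores_by_judge):
    """Kendall's W for scores_by_judge[j][r] (no tie correction applied)."""
    m = len(scores_by_judge)        # number of judges
    n = len(scores_by_judge[0])     # number of riders
    rank_rows = [average_ranks(row) for row in scores_by_judge]
    rank_sums = [sum(row[r] for row in rank_rows) for r in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((rs - mean_sum) ** 2 for rs in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))

# Made-up data: two judges' marks for six movements ...
judge_a = [7, 6, 7, 8, 6, 7]
judge_b = [7, 6, 6, 8, 6, 7]
print("kappa:", cohens_kappa(judge_a, judge_b))

# ... and three judges' percentage scores for four riders.
panel = [
    [68.5, 71.0, 65.2, 73.4],
    [67.9, 70.1, 66.0, 72.8],
    [69.0, 68.7, 64.9, 74.1],
]
print("W:", kendalls_w(panel))
```

In practice one would rely on a tested statistics package rather than hand-written functions. The point is only that each statistic reduces to a few lines of arithmetic on the score sheets.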

Without boring readers with lots of mathematical mumbo-jumbo, I hope to have shown that the tools to measure variability in dressage scoring are readily available today. All it takes is the will to embrace these concepts and submit some popular opinions to the test of statistical rigor.

Using these tools, we can investigate:

- Whether there are clear standards against which to judge;

- Whether those standards are consistently applied;

- Whether there is repeatability in scoring;

- Whether there is reproducibility in scoring (do multiple judges give the same mark?);

- Whether the variability arises from the judges’ different positions around the arena;

- Whether there is variability in the application of the scale.

I could write at length about statistics, so hopefully I have not bored the gentle reader. My goal is that the dressage community learns about these tools. Commercial software packages readily allow us to compute these measures. These are robust statistical tools that have been well studied and applied in science, industry, and medicine for over 70 years. Dressage needs only to adopt them.

The FEI has the opportunity to lead the pack of subjectively judged Olympic sports and embrace these tried and true technologies. We don’t need to reinvent statistical methodology to improve judging in dressage.


Ana E. Diaz, PE is a practicing engineer who spent 10 years of a long career reducing variability and improving product quality at a $30B global manufacturing company. She taught Operations Management to MBA students and is currently adjunct faculty at Widener University’s School of Engineering.